Executive Summary

How can policymakers credibly reveal and assess intentions in the field of artificial intelligence? AI technologies are evolving rapidly and enable a wide range of civilian and military applications. Private sector companies lead much of the innovation in AI, but their motivations and incentives may diverge from those of the state in which they are headquartered. As governments and companies compete to deploy ever more capable systems, the risks of miscalculation and inadvertent escalation will grow. Understanding the full complement of policy tools to prevent misperceptions and communicate clearly is essential for the safe and responsible development of these systems at a time of intensifying geopolitical competition.

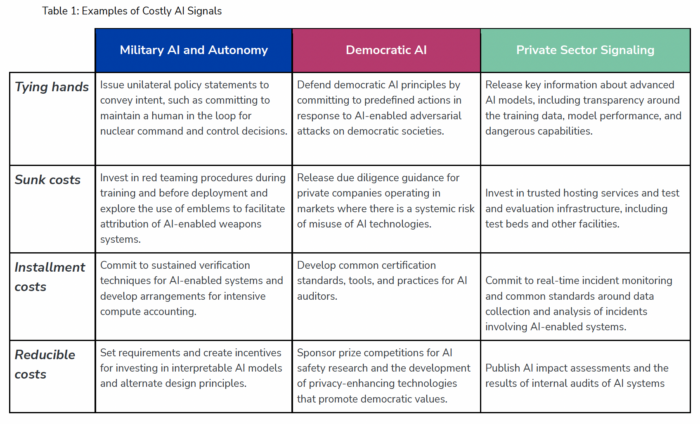

In this brief, we explore a crucial policy lever that has not received much attention in the public debate: costly signals. Costly signals are statements or actions for which the sender will pay a price (political, reputational, or monetary) if they back down or fail to make good on their initial promise or threat. Drawing on a review of the scholarly literature, we highlight four costly signaling mechanisms and apply them to the field of AI (summarized in Table 1):

- Tying hands involves the strategic deployment of public commitments before a foreign or domestic audience, such as unilateral AI policy statements, votes in multilateral bodies, or public commitments to test and evaluate AI models;

- Sunk costs rely on commitments whose costs are priced in from the start, such as licensing and registration requirements for AI algorithms or large-scale investments in test and evaluation infrastructure, including testbeds and other facilities;

- Installment costs are commitments for which the sender pays a price in the future rather than the present, such as sustained verification techniques for AI systems and accounting tools for the use of AI chips in data centers;

- Reducible costs are paid up front but can be offset over time depending on the actions of the signaler, such as investments in more interpretable AI models, commitments to participate in the development of AI investment standards, and alternate design principles for AI-enabled systems.

We explore costly signaling mechanisms for AI in three case studies. The first case study considers signaling around military AI and autonomy. The second case study examines governmental signaling around democratic AI, which embeds commitments to human rights, civil liberties, data protection, and privacy in the design, development, and deployment of AI technologies. The third case study analyzes private sector signaling around the development and release of large language models (LLMs).

Costly signals are valuable for promoting international stability, but it is important to understand their strengths and limitations. Following the Cuban Missile Crisis, the United States benefited from establishing a direct hotline with Moscow through which it could send messages. In today’s competitive and multifaceted information environment, far more actors influence the signaling landscape, and opportunities for misperception abound. Signals can also be inadvertently costly. U.S. government signaling on democratic AI sends a powerful message about its commitment to certain values, but it risks a breach with partners who may not share these principles and could expose the United States to charges of hypocrisy. Not all signals are intentional, and commercial actors may conceptualize costs differently from governments or from industry players in other sectors and countries. These complexities are not insurmountable, but they pose challenges for signaling in an economic context where private sector firms drive innovation and may have interests at odds with those of the countries in which they are based.

Given the risks of misperception and inadvertent escalation, leaders in the public and private sectors must take care to embed signals in coherent strategies. Costly signals come with tradeoffs that need to be managed, including tensions between transparency for signaling purposes and norms around privacy and security. The opportunities for signaling credibly expand when policymakers and technology leaders consider not only whether to “conceal or reveal” a capability, but also how they reveal and the specific channels through which they convey messages of intent. Multivalent signaling, or the practice of sending more than one signal, can have complementary or contradictory effects. Compatible messaging from public and private sector leaders can enhance the credibility of commitments in AI, but officials may also misinterpret signals if they lack appropriate context for assessing capabilities across different technology areas. Policymakers should consider incorporating costly signals into tabletop exercises and focused dialogues with allies and competitor nations to clarify assumptions, mitigate the risks of escalation, and develop shared understandings around communication in times of crisis. Signals can be noisy, occasionally confusing some audiences, but they are still necessary.