Policymakers and the public are concerned about the rapid adoption and deployment of AI systems. To address these concerns, governments are taking a range of actions, from formulating AI ethics principles to compiling AI inventories and mandating AI risk assessments. But efforts to ensure AI systems are safely developed and deployed require a standardized approach to classifying the varied types of AI systems.

This need motivated CSET, in collaboration with partners at the OECD and DHS, to explore whether frameworks could help people classify AI systems. Building the frameworks involved the following two-step process:

- Identify policy-relevant characteristics of AI systems (e.g., autonomy) to be the framework dimensions;

- Define a set of levels for each dimension (e.g., low, medium, high) to which an AI system can be assigned.

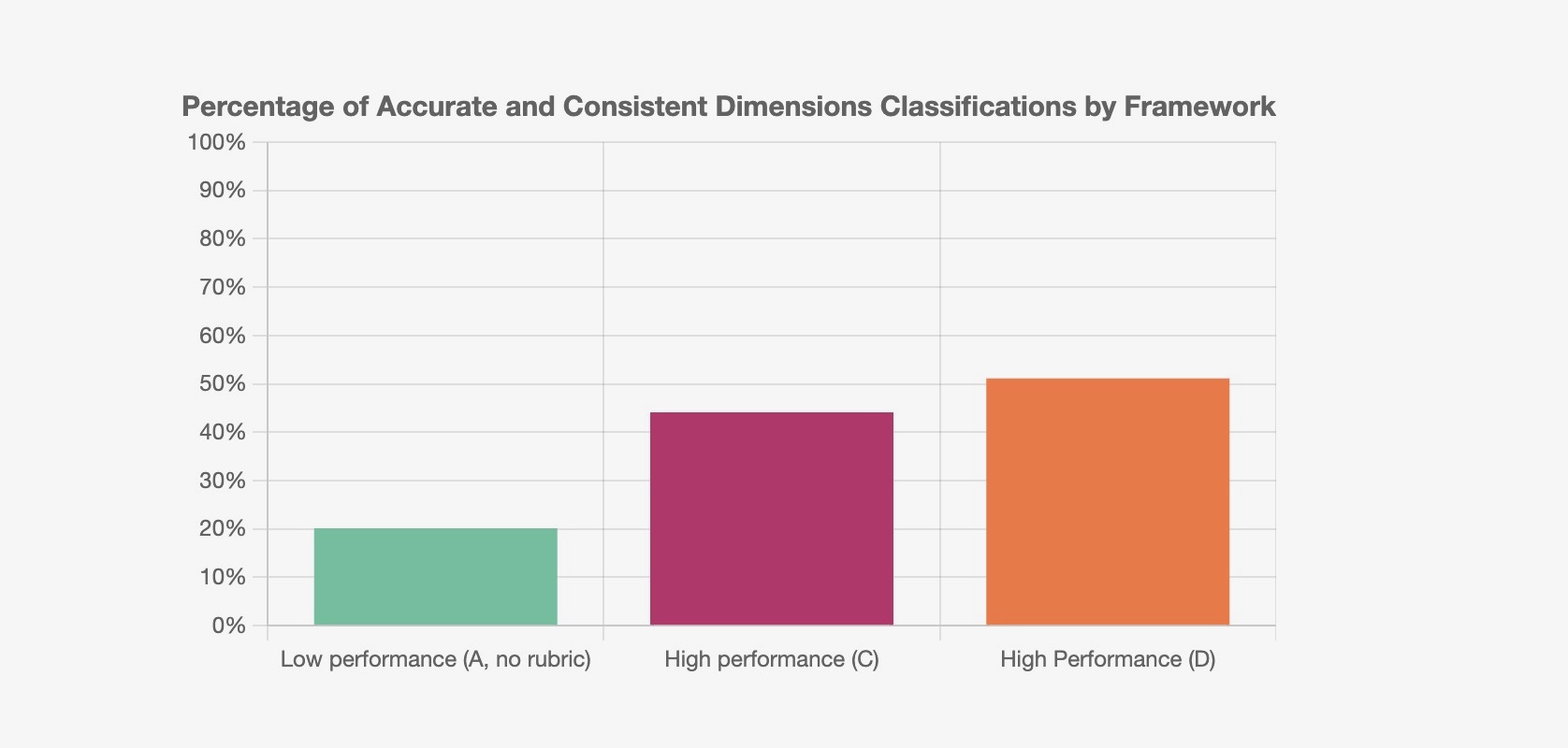

To test the usability of the four resulting frameworks, CSET fielded two rounds of a survey experiment in which more than 360 respondents completed more than 1,800 unique AI system classifications using the frameworks. We compared the consistency and accuracy of user classifications across frameworks to provide insight into how the public and policymakers could use the frameworks and which frameworks may be most effective. We recently published a report that explores the development and testing of the frameworks in more detail.

This interactive complements that report by allowing readers to explore the frameworks, review framework-specific classification performance, and even try classifying a few systems. It includes key takeaways from the study and provides the definitions, rubrics, and classification results for the four tested frameworks: A, B, C, and D.