Executive Summary

Harms from the use of artificial intelligence systems (“AI harms”) are varied and widespread. Monitoring and examining these harms (AI harm analyses) is a critical step toward mitigating risks from AI. Such analyses directly inform AI risk mitigation efforts by improving our understanding of how AI systems cause harm, enabling earlier detection of emerging types of harm, and directing resources to where prevention is needed most.

This paper introduces the CSET AI Harm Framework, a standardized conceptual framework to support and facilitate analyses of AI harm. The framework improves the comparability of harm monitoring efforts by providing a common foundation for consistently identifying AI harms, while remaining modular enough to adapt to different analytical needs.

The CSET AI Harm Framework lays out the key elements required to identify AI harm, their basic relational structure, and their definitions, without imposing a single interpretation of AI harm. Specifically, this framework:

- Defines “AI harm” as an entity experiencing harm (or the potential for harm) that is directly linked to the behavior of an AI system.

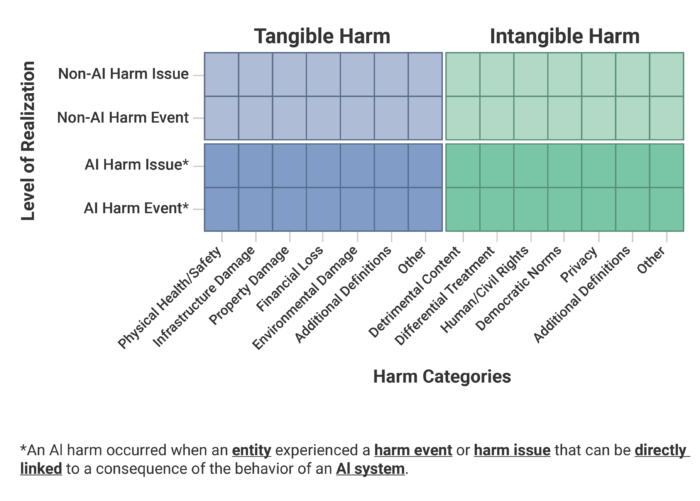

- Groups harm into either tangible or intangible harm. Tangible harm is harm that is observable, verifiable, and definitive. Intangible harm is harm that cannot be directly observed or does not have any material or physical effect. Because of its observability, tangible harm is inherently easier to detect and identify. This means that tangible harm data is more consistent, less noisy, and easier to analyze.

- Allows users to define additional categories of tangible and intangible harm. The CSET AI Harm Framework provides some common categories of harm, such as harm to physical health or safety, financial loss, property damage, detrimental content, bias and differential treatment, and violation of privacy, human and civil rights, or democratic norms. The framework also allows for the inclusion of new categories, since new harm types could emerge in the future or prove more relevant to another incident data source.

- Distinguishes harm that actually occurred from harm that may occur. Differentiating between harm that occurred and harm that may occur allows realized harms to be tracked, while also enabling research and analysis on potential harms, which represent risks and vulnerabilities.
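
The elements listed above can be sketched as a small data model. This is a minimal illustration, not the framework's official schema: the class names, field names, and the two example records are hypothetical, and the only logic taken from the text is that an AI harm requires an entity, an AI system, and a direct link between the system's behavior and the harm.

```python
from dataclasses import dataclass
from enum import Enum


class Tangibility(Enum):
    TANGIBLE = "tangible"      # observable, verifiable, and definitive
    INTANGIBLE = "intangible"  # not directly observable, or no material effect


class Realization(Enum):
    OCCURRED = "occurred"    # harm that actually happened
    POTENTIAL = "potential"  # harm that may occur (a risk or vulnerability)


@dataclass(frozen=True)
class HarmRecord:
    """Hypothetical record type; field names are assumptions for illustration."""
    entity: str             # who or what experiences the harm
    ai_system: str          # the AI system whose behavior is implicated
    category: str           # a harm category label, user-extensible
    tangibility: Tangibility
    realization: Realization
    directly_linked: bool   # is the harm directly linked to the system's behavior?

    def is_ai_harm(self) -> bool:
        # All three elements of the definition must be present.
        return bool(self.entity and self.ai_system and self.directly_linked)


def realized(records):
    """Keep only AI harms that actually occurred."""
    return [r for r in records
            if r.is_ai_harm() and r.realization is Realization.OCCURRED]


# Invented example records, for illustration only.
records = [
    HarmRecord("pedestrian", "driver-assistance system",
               "physical health or safety",
               Tangibility.TANGIBLE, Realization.OCCURRED, True),
    HarmRecord("loan applicants", "credit-scoring model",
               "bias and differential treatment",
               Tangibility.INTANGIBLE, Realization.POTENTIAL, True),
]
```

Keeping tangibility and realization as separate fields mirrors the text: the two distinctions are independent, so a record can be filtered on either one.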

In addition to providing introductions to the definitions and concepts of the CSET AI Harm Framework, this report also:

- Discusses how users can adapt the framework. To apply the framework to data, users should create a customized framework. This requires specifying the framework’s components in enough detail that it can be used to extract all the information needed to identify and characterize AI harms according to the user’s analytic interests and the limitations of the data source.

- Provides an example customized framework. As an example, this report shows how the CSET AI Harm Framework was customized for use in the CSET AI Harm Taxonomy for AIID. Since modifications and definitions are centrally documented in the CSET taxonomy, database users can retrace the underlying framework and compare it to other taxonomies built on the CSET AI Harm Framework.

- Details future additions to the framework. Future versions of the CSET AI Harm Framework will incorporate content on the severity and spread of AI harm. Taken together, these factors can inform assessments of the aggregate impact of a particular harm.
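
As a rough sketch of the adaptation step described above, a customized framework might start from the common harm categories named in this summary and add categories suited to a particular data source. The `CustomizedFramework` class, its methods, and the added category are hypothetical. Splitting the last common category into three separate violations is one reading of the text, not a documented part of the CSET taxonomy.

```python
# Common categories as named in this summary; the three "violation of ..."
# entries reflect an assumed split of the final item in that list.
COMMON_CATEGORIES = frozenset({
    "physical health or safety",
    "financial loss",
    "property damage",
    "detrimental content",
    "bias and differential treatment",
    "violation of privacy",
    "violation of human and civil rights",
    "violation of democratic norms",
})


def _normalize(label: str) -> str:
    return label.strip().lower()


class CustomizedFramework:
    """Hypothetical container for one user's customization of the framework."""

    def __init__(self, name: str, extra_categories=()):
        self.name = name
        # Start from the common categories and add user-defined ones.
        self.categories = set(COMMON_CATEGORIES) | {
            _normalize(c) for c in extra_categories
        }

    def validate(self, category: str) -> str:
        """Return the normalized label, or raise if it is not defined here."""
        label = _normalize(category)
        if label not in self.categories:
            raise ValueError(
                f"{category!r} is not defined in framework {self.name!r}"
            )
        return label


# Invented customization with one additional category.
demo = CustomizedFramework("demo", extra_categories=["Environmental damage"])
```

Recording the additions in one place makes it possible to see how a given customization departs from the shared base, which is what allows taxonomies built on the same framework to be compared.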

More detailed annotation guidelines are available on GitHub in the CSET AI Harm Taxonomy for AIID and Annotation Guide.