Executive Summary

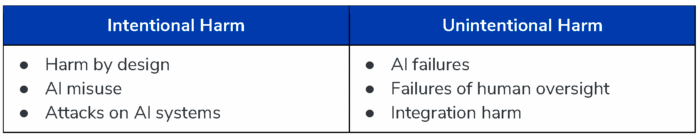

With recent advancements in artificial intelligence, particularly powerful generative models, private and public sector actors have heralded the benefits of incorporating AI more prominently into our daily lives. Frequently cited benefits include increased productivity, efficiency, and personalization. However, the harm caused by AI is not yet fully understood. As AI deployment and use have widened, the number of AI harm incidents has surged in recent years, suggesting that current approaches to harm prevention may be falling short. This report argues that the shortfall stems from a limited understanding of how AI risks materialize in practice. Drawing on incident reports from the AI Incident Database, it analyzes how AI deployment results in harm and identifies six key mechanisms that describe this process (Table 1).

Table 1: The Six AI Harm Mechanisms

A review of AI incidents associated with these mechanisms leads to several key takeaways that should inform AI governance approaches in the future.

- A one-size-fits-all approach to harm prevention will fall short. This report illustrates the diverse pathways to AI harm and the wide range of actors involved. Effective mitigation requires an equally diverse response strategy that includes sociotechnical approaches. Relying on model-based approaches alone risks neglecting integration harms and failures of human oversight in particular.

- To date, risk of harm correlates only weakly with model capabilities. This report illustrates many instances of harm that implicate single-purpose AI systems. Yet many policy approaches use broad model capabilities, often proxied by computing power, as a predictor of the propensity to do harm. Such approaches fail to mitigate the significant risk associated with the irresponsible design, development, and deployment of less powerful AI systems.

- Tracking AI incidents offers invaluable insights into real AI risks and helps build response capacity. Technical innovation, experimentation with new use cases, and novel attack strategies will result in new AI harm incidents in the future. Keeping pace with these developments requires rapid adaptation and agile responses. Comprehensive AI incident reporting allows for learning and adaptation at an accelerated pace, enabling improved mitigation strategies and identification of novel AI risks as they emerge. Incident reporting must be recognized as a critical policy tool to address AI risks.