Continuing our exploration of past CSET surveys, we now take a look at a 2019 CSET survey examining AI professionals’ willingness to work on U.S. Department of Defense (DOD) projects. Others have since published research on the same subject, including a 2022 RAND Corporation report. That report, based on a 2021 survey, sheds additional light on the views of technical staff and software engineers in the U.S. private sector toward potential DOD AI applications. Here we highlight findings that are consistent across both surveys.

While both surveys collected perspectives from tech sector professionals and examined the relationship between the DOD and the U.S. tech sector, a few differences in sampling and survey design are noteworthy. CSET sampled professionals with AI-related skills employed at U.S.-based AI companies and collected a total of 164 responses (a 1.4 percent response rate).1 In contrast, the RAND survey sampled private-sector software engineers who had graduated from top-ranked computer science universities, worked at major Silicon Valley tech corporations, or worked at defense contractors. RAND collected a total of 1,178 responses (a 1.1 percent response rate).

Both surveys found that many tech professionals are willing to work on DOD projects and are comfortable with many DOD applications of AI. CSET found that 38 percent of respondents were positive and 40 percent were neutral toward working on DOD projects. Meanwhile, most respondents in the RAND survey reported being comfortable with a wide variety of potential military applications for AI. RAND respondents’ comfort did vary, however, with applications that involve the use of lethal force, a lack of human oversight, reduced physical proximity to the battlefield, or a higher level of destruction. Most RAND respondents were uncomfortable with AI using lethal force without human supervision. While the majority of respondents in both surveys indicated willingness to work on, or comfort with, DOD projects, a few respondents at the extremes expressed either intensely positive or intensely negative views about working on DOD projects, or deep concern about all DOD AI applications.

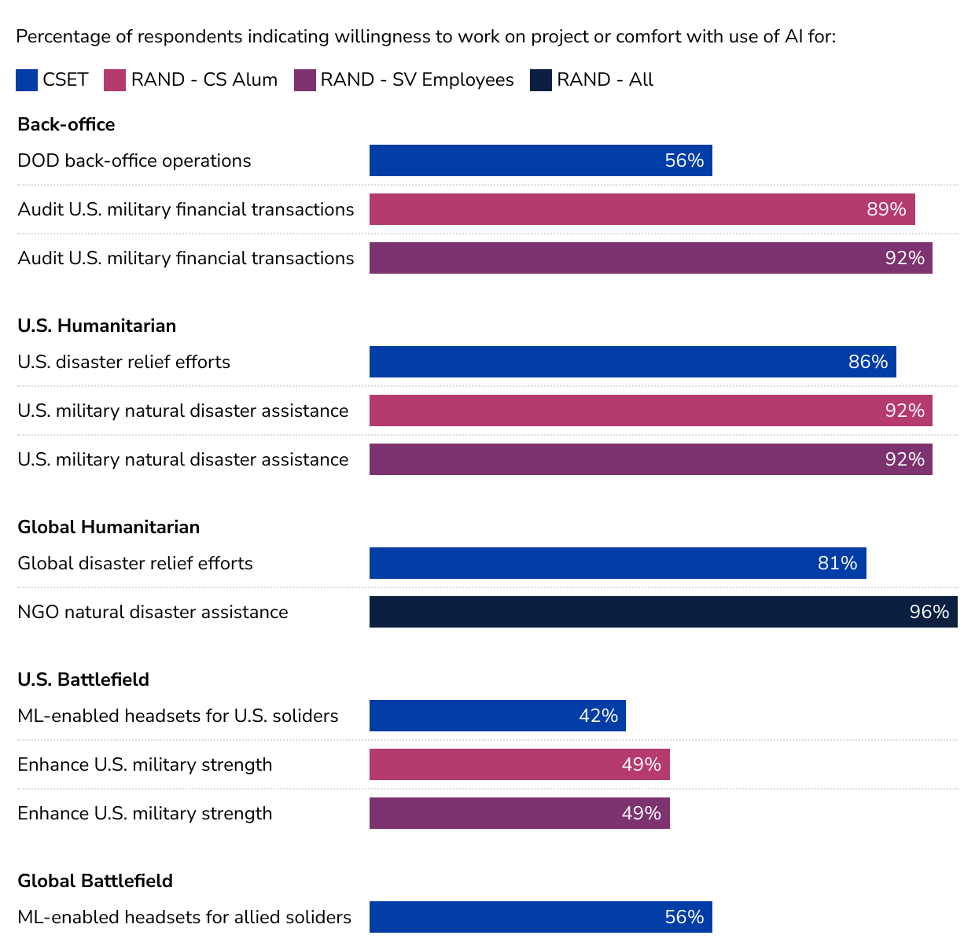

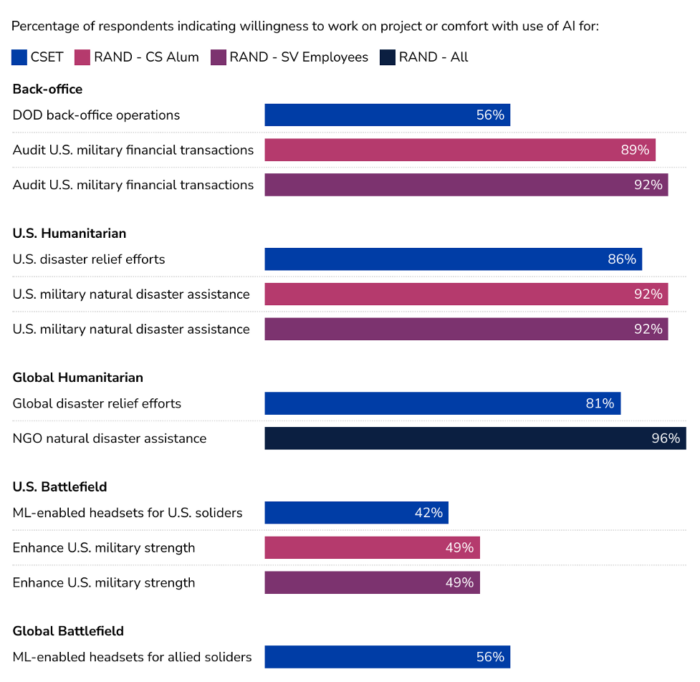

The CSET survey included an embedded experiment and found that respondents were more comfortable working on projects with humanitarian applications than on battlefield ones. Similarly, when RAND researchers asked about a range of applications, they found respondents were “overwhelmingly comfortable” with “Protective and Non Battlefield Uses.”2 These include using AI to audit financial transactions, assist with natural disaster response, use force to intercept unmanned weapon systems, and optimize battlefield supply chains. Figure 1 presents the proportion of respondents in each survey who indicated comfort with these types of AI projects and applications.

Figure 1. Comparison of Respondents’ Comfort Levels with DOD AI Applications and Willingness to Work on DOD Projects.

Tech sector professionals in both surveys also expressed concerns, and the results show that end use matters. In CSET’s survey, respondents who were unwilling to work on DOD projects cited end use as a key factor in deciding which projects to work on. More specifically, 59 percent of respondents identified causing harm as the most convincing reason not to work on DOD-funded projects. The second most commonly cited concern was a lack of decision-making power over matters such as publishing decisions, financial incentives, and project objectives. In the RAND survey, AI applications involving unsupervised lethal force, and those in which AI recommends lethal force but a human makes the decision, sparked significant concern; 71 percent of respondents expressed discomfort with the former. Despite these concerns, both surveys found some evidence of areas where the tech and defense sectors share a mutual interest in further discussion and collaboration.

One methodological difference shapes the conclusions we can draw from each survey. RAND participants were asked about their comfort levels with DOD applications of AI; that is, they were assessed on attitude or belief. CSET’s survey asked respondents about their willingness to work on such projects; that is, they were assessed on behavior (more precisely, intended behavior). Comfort level is likely one factor in which projects tech professionals are willing to work on, but it is not the only one: a person may be comfortable with an application yet still decline to work on it, and taking active steps to work or not work on a given type of project is a different matter. An assessment of behavior therefore offers insight into which projects tech sector professionals are willing to work on. When paired with CSET’s finding that a majority of respondents regularly think about the impact and implications of the technology projects they work on, this behavioral assessment suggests there is room for the tech and defense communities to have a conversation on this topic.

An important takeaway is that both surveys found most tech sector professionals were comfortable with, or willing to work on, DOD projects. Respondents’ views also varied with end use, and these areas of mutual interest and concern can help inform future cooperation between the DOD and the tech sector.

In our next survey Data Snapshot, we will share details of more recent survey work underway at CSET.

1. For more details on sampling methodology, see page 8 of *“Cool Projects” or “Expanding the Efficiency of the Murderous American War Machine?”*

2. For more details, see page 29 of *Exploring the Civil-Military Divide over Artificial Intelligence*.