Executive Summary

Organizations have a growing number of tools at their disposal to implement responsible AI systems, or systems that minimize unwanted risks and create beneficial outcomes. However, it is not always clear how to select and apply these tools. This paper provides a way for organizations to systematically characterize one type of tool, namely process-based frameworks, in a manner that accommodates their specific needs. Process frameworks for AI provide a blueprint to ensure that organizations are prepared to meet the challenges and reap the benefits of AI systems. They can help an organization prioritize aspects of system design, build lines of accountability into product development teams, and engage with impacted communities, among many other critical functions. Without an action plan to follow, organizations would struggle to establish the infrastructure, resources, and capabilities needed for responsible AI.

However, process frameworks vary in their level of specificity, with many erring on the side of generality to allow flexibility in implementation. Although this can be desirable in certain circumstances, it can be burdensome for organizations that want to choose a framework but lack experience in implementing responsible AI. Devising a standard way of comparing a large number of frameworks takes time and energy that organizations may not have. First, organizations may struggle to determine who can use a framework. While some frameworks name a target audience, many do not. Even when an audience is mentioned, it is commonly described in general terms, which makes it difficult to assign responsibilities for operationalizing a framework. Second, once an audience is identified, it may still be difficult to discern which needs are satisfied by that framework.

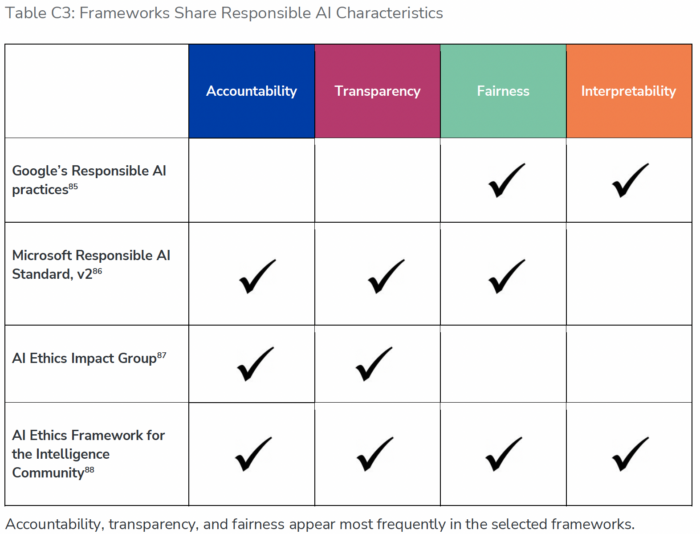

In this brief, we reviewed and organized more than 40 existing responsible AI frameworks put forth by a variety of companies, international organizations, government bodies, and non-governmental organizations, and mapped them onto a matrix that can help organizations better understand, select, and implement responsible AI in a way that best fits their needs. The matrix is user-focused: it is oriented toward the people who are most directly involved in implementing frameworks within an organization that builds or uses AI, namely, the Development and Production team and the Governance team. To help these users select the frameworks that will best serve their needs, the matrix adds a Utility dimension, further classifying frameworks according to their respective areas of focus: an AI system’s components, an AI system’s lifecycle stages, or characteristics related to an AI system. The matrix provides a structured way of thinking about who could benefit from a framework and how that framework could be used, which helps organizations apply frameworks precisely and understand the utility of each framework relative to guidance that already exists.